MASIC: INFLUENCE OF COMPUTER SCIENCES ON THE DEVELOPMENT OF MEDICAL INFORMATICS

The intensive development of science and technology has led to constant improvement in the architecture of computers and in the range of their operational capabilities. From the construction of the first electronic computers in the last century to the present day, computers have passed through several phases of development, and we now await the coming generation of so-called neuro-computers.

The first device for calculations, called Mark I (“Automatic Sequence Controlled Calculator”), was constructed by Howard Aiken, a Harvard scientist, for the International Business Machines Corporation (IBM), which was established in 1911 by its founder Charles Ranlett Flint at Endicott, New York, U.S. This device performed automatic calculations from beginning to end. For this reason, the majority of scientists consider it to be the first electromechanical computer that performed operations without human intervention (1-3).

In 1943, John Presper Eckert and John W. Mauchly, from the University of Pennsylvania in Philadelphia, began constructing the device ENIAC (“Electronic Numerical Integrator and Computer”), which weighed 30 tons and had 18,000 electronic tubes. ENIAC occupied a lot of space, about 167 square meters (1,800 square feet). Although ENIAC was, in a technological sense, a bit below Mark I, it was still about a thousand times quicker.

This machine, and those similar to it, were exceptionally big by today's standards, produced huge quantities of heat, and were very expensive to build and operate.

Malfunctions were also frequent. The machines required special rooms, and they worked very slowly compared to the machines we have today. For example, one addition took about 0.6 milliseconds, and one multiplication took about 15 milliseconds. The price of these machines was high, qualified personnel and experts were costly, and programmers were scarce, so these machines were reserved for larger and more complex tasks that involved manipulating huge amounts of data for scientific and engineering purposes (1).

John von Neumann proposed that operational instructions should be held in the computer's memory alongside the data, which led to the production of EDVAC (“Electronic Discrete Variable Automatic Computer”) in 1946 and of UNIVAC (“Universal Automatic Computer”) in 1952, the first computer for commercial use, followed by UNIVAC II, IBM 704, IBM 705, etc.

This was a period of fantastic development of computers. We emphasize that von Neumann was the first to describe precisely all the elements and functions of the computer system, and his design is considered a cornerstone for the series of computer systems of differing performance in later generations of computers (1).
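The stored-program idea can be illustrated with a minimal sketch. The four-instruction set below (LOAD, ADD, STORE, HALT) and the memory layout are hypothetical, chosen only to show instructions and data sharing one memory; they do not reproduce the instruction set of EDVAC or of any real machine.

```python
# Toy stored-program machine: instructions and data share one memory list.
# The instruction set (LOAD, ADD, STORE, HALT) is hypothetical, for illustration only.

def run(memory):
    """Execute the program stored in `memory`, starting at address 0."""
    acc = 0   # accumulator register
    pc = 0    # program counter: address of the next instruction
    while True:
        op, arg = memory[pc]      # fetch the instruction from memory
        pc += 1
        if op == "LOAD":          # copy a memory cell into the accumulator
            acc = memory[arg]
        elif op == "ADD":         # add a memory cell to the accumulator
            acc += memory[arg]
        elif op == "STORE":       # write the accumulator back to memory
            memory[arg] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction: {op}")

# Addresses 0-3 hold instructions; addresses 4-6 hold data.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", None),
    2,   # address 4: first operand
    3,   # address 5: second operand
    0,   # address 6: the result is stored here
]

result = run(program)
print(result[6])  # prints 5
```

Because the program is just the contents of memory, changing the task means loading different values into the same memory, not rewiring the machine.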

The first computers of the second generation were built in 1957, and the majority in the period from 1959 to 1964. Their characteristic feature is that they used transistors and semiconductor diodes instead of electronic tubes. The appearance of transistors in electronics started a revolution in the building of widely available computers.

This significantly reduced size, space, and electricity costs and improved efficiency. These computers were significantly faster: multiplication took about 20 microseconds. Notably, the computers of the second generation used small magnetic cores for the primary memory. This was the time when computers came into general use, and more people became interested in training as professional programmers.

The people who worked on these machines inspired awe in the general public. In this period several programming languages were introduced, such as ALGOL and COBOL. Some of the computers from this generation are RCA 501, IBM 7070, 7090, HONEYWELL 800, IBM 1400, IBM 1600, CDC 160, NCR 500, and others (1).

The computers of the third generation (from 1964) were based on monolithic technology and integrated circuits. The period in which these computers were built lasted until 1971. The new technology led to further microminiaturization, so that a chip of small dimensions held a great number of various elements (transistors and diodes). For example, a chip sized 1-2 cm held around 30 different electronic elements.

These computers were fast and could perform around 10 million additions in a minute. The computers of this generation used numerous programming languages and supported multiprogramming. Their basic characteristic is that they could perform several jobs at once, using the “time-sharing” technique.

The deficiency of the earlier computers was that the central processing unit (CPU) was at times inactive, waiting for some input or output device to finish its task. This was changed so that the CPU serves several jobs at once. Namely, the CPU, which is the “brain and heart” of every computer, is now shared between concurrent programs, serving them while simultaneously monitoring and managing the work of several input/output (I/O) units.
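The sharing of one CPU between several jobs can be sketched as a round-robin simulation. This is a simplified illustration under stated assumptions: the job names, the time units, and the fixed time slice are hypothetical, and a real time-sharing system also switches jobs whenever one of them waits for an I/O device, which this sketch does not model.

```python
from collections import deque

def round_robin(jobs, time_slice):
    """Simulate time-sharing: each job gets the CPU for at most `time_slice` units.

    `jobs` maps a job name to the CPU time it still needs.
    Returns the list of (job, units_run) slices in execution order.
    """
    queue = deque(jobs.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        used = min(time_slice, remaining)
        timeline.append((name, used))
        if remaining > used:   # not finished: rejoin the back of the queue
            queue.append((name, remaining - used))
    return timeline

# Hypothetical jobs and their required CPU time, in arbitrary units.
timeline = round_robin({"payroll": 5, "lab_report": 2, "inventory": 3}, time_slice=2)
for name, used in timeline:
    print(name, used)
```

With a time slice of 2, the short job finishes after its first turn instead of waiting for the longest job to complete, which is the practical benefit users saw from shared CPU time.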

This generation of computers was also more accessible than the previous one, in which a job had to be transferred to punched paper tape or punched cards, prepared in advance far from the computer, while the user waited several hours or days for the results.

They had the power and capacity to perform complex and extensive tasks, and they made use of operating systems, which made scheduling work much easier. The resources within the system could be shared without mutual interference, and users had “direct access” to the computer through a monitor, with the option of communicating with the computer from distant locations.

This favored the production of various input-output units, different screens, and memory devices (disks) on which millions of data items were easily available to the user. Some of the computers from this generation are IBM 360, RCA SIEMENS 4004, UNIVAC 9000, CDC 6600, HONEYWELL 200, and others.

The computers of the fourth generation were built from 1971 on the principle of so-called “Large Scale Integration” (LSI). The basis of this technique is chips sized 3×3 millimeters or similar, with 100-200 different electronic elements placed on 1 mm. This allowed even greater miniaturization and created devices of great capability at reduced cost. The computers of this generation operated in nanoseconds, performing approximately 10-15 million operations per second.

These computers have a large capacity of central memory, from one to several gigabytes. Despite the significant capacity of the large computers, they still could not meet the growing demands of users. Therefore, the development of minicomputer systems with their own hardware and software (“operating systems”) was pushed forward at significantly reduced cost, fundamentally changing the conception of how information systems are developed and applied, in the sense of their decentralization.

Decentralization, that is, distribution into networks of terminals and PCs, significantly improves the management of computer equipment and transfers the management of organizations to a lower level. The computers of these generations have a simplified internal structure, a shorter “word” length, and simplified logic for “addressing the data” placed in the memory of the computer (1).

The length of the word reflects the potential capabilities of a computer. The majority of large computers used 32 bits to represent one word (“32-bit computers”), and some used 48 bits or even longer words. Large computers also have complex operating systems (in fact “computer programs”) that classify and manage different tasks. Minicomputers of this generation were built for “16-bit words” and could not operate on large numbers within a single instruction cycle (in theory, they need more time to process the same problem). However, “16-bit minicomputers”, significantly cheaper and slower, with an operating system adapted to a specific task, can manage that task better and quicker than large computers. The greatest producers of minicomputers in the sixties and seventies were (besides IBM) Digital Equipment Corporation, Data General, NCR, and others.
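The effect of word length can be made concrete with a short calculation. The sketch below assumes unsigned integers and ignores sign bits and instruction formats; the helper names `unsigned_range` and `words_needed` are hypothetical, introduced only for this illustration.

```python
def unsigned_range(bits):
    """Largest unsigned integer that fits in one word of `bits` bits."""
    return 2 ** bits - 1

def words_needed(value, bits):
    """Number of `bits`-wide words needed to hold a non-negative integer."""
    if value < 0:
        raise ValueError("value must be non-negative")
    # Ceiling division of the bit count by the word size; zero still takes one word.
    return max(1, -(-value.bit_length() // bits))

print(unsigned_range(16))         # 65535
print(unsigned_range(32))         # 4294967295
print(words_needed(100_000, 16))  # 2
print(words_needed(100_000, 32))  # 1
```

A value such as 100,000 fits in one 32-bit word but needs two 16-bit words, so a 16-bit machine has to handle it in more than one step, which is why the same problem can in theory take it longer.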

The typical representatives of these generations of computers are IBM 370, Siemens 7700, Univac 100, Honeywell 66, and others. By the end of the seventies, the producers of large computers began to produce 32-bit minicomputers and to widen the capabilities of their operating systems. The use of computers became cheaper, and the number of trained, skilled people grew abruptly, which led to wider applications of computers.

The progress in the field of “integrated circuits” (created by Jack Kilby in 1958) led to the development of microprocessors, which were used as input/output processors within large computers and also in small minicomputers, for managing a greater number of local terminals and for connecting them into large networks (3). Significantly improved microelectronics made it possible to place powerful logic functions within small silicon components, the integrated circuits or microchips. The logic device can be programmed, and all its components are found on a single silicon chip, called the microprocessor.

Microprocessors were developed as processing units of simple structure for integration into cheaper microcomputers. This started a revolution in the development of mini and microcomputer systems because microprocessors became the central processing units for a new type of computer intended for individuals. The creator of the first microprocessor is Intel (the 4004 in 1971, followed by the 8008), and the first personal computer appeared in 1975 (Altair 8800). In 1977 the market offered computers made by Apple Computer Inc., Commodore Business Machines, Tandy-Radio Shack, and others.

The first microcomputers, which used 8-bit microprocessors, were available on the market for both professional organizations and individuals (mainly programmers). In these years we already had powerful machines capable of managing big libraries. “The characteristic of these machines is that they are projected for more usable use and have the possibilities of the simultaneous performance of several various programs or applications” (1). Among the different products in the domain of microcomputers, we distinguish: “the pocket computer, laptop/notebook, the personal and small general-purpose computers” (1). In the literature, the terms “personal computer” and “microcomputer” are often used as synonyms (1, 3).

Later microcomputers for multi-business applications used 16 bits, and memory locations could be addressed with a 32-bit binary address system. Intel later announced detailed information about its first 64-bit processor, called Itanium (Merced). Itanium was to run at 800 MHz and was announced as able to process about 6.4 billion operations per second; according to Intel, it was about ten times quicker than RISC processors and would execute the usual 32-bit instructions as fast as the Pentium III processors. The core itself contained 25.4 million transistors, and the integrated 4 MB of third-level cache memory had as many as 320 million transistors.

Pentium III processors, with their 256 KB of cache memory, had only 28 million transistors. The first chipset that was to support Itanium enabled the parallel work of 512 processors and 64 GB of SDRAM memory. With the corresponding software, such systems could successfully serve many users. Underlying all of this is the structure developed by John von Neumann, called the “von Neumann architecture” (1).

The fifth generation of computers enables even simpler communication between humans and computers. It combines the advantages of computers, namely the speed and reliability of calculation and memory, with abilities similar to those of humans when they make decisions and act in new and unpredictable situations.

The computers of the fifth generation work similarly to humans, that is, they behave as “intelligent”. They solve problems by means of expert systems, which support the work of experts in a field (for example, in diagnosing a disease, deciding on a therapy, and similar). Here the computer helps by explaining why, and on what basis, it decided on a particular solution (a given diagnosis or the choice of a particular therapy).
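How an expert system can both reach a conclusion and explain it may be sketched with a few forward-chaining rules. The rules below are hypothetical toy examples invented for this illustration; they are not clinical knowledge and are not taken from any system described in the text.

```python
# Hypothetical toy rules for illustration only; not clinical knowledge.
# Each rule is (set of required facts, conclusion).
RULES = [
    ({"fever", "cough"}, "suspected respiratory infection"),
    ({"suspected respiratory infection", "shortness of breath"}, "recommend chest X-ray"),
]

def infer(findings):
    """Forward-chain over RULES and record why each conclusion was reached."""
    facts = set(findings)
    explanations = []
    changed = True
    while changed:                 # repeat until no rule adds a new fact
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                explanations.append(
                    f"{conclusion}: because {', '.join(sorted(conditions))}"
                )
                changed = True
    return facts, explanations

facts, why = infer({"fever", "cough", "shortness of breath"})
for line in why:
    print(line)
```

Each derived conclusion carries the list of facts that triggered it, which is the kind of "why, and on what basis" explanation the paragraph above describes.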

The breakthrough of computer and information technologies into all segments of society led to the need for knowledge of these technologies. The knowledge of information technology is now part of general literacy. Computer literacy does not require comprehensive and detailed knowledge of electronics or programming.

The electronic computer is a means by which we can solve problems more successfully. It is probably one of the most important inventions of the second half of the previous century. The expansive breakthrough of computer technologies into all spheres of human work characterizes the new wave of great changes that is often called ‘the computer revolution’ (1). The intensive development of electronic technology in the twentieth century enabled the construction of machines which, besides arithmetic operations, can also perform more complex logic operations, making it possible to solve quickly, easily, and reliably tasks that were impossible to solve by standard methods of processing before the invention of the computer.

It has been proven in practice that computers have an extraordinary ability to process a great amount of data in a short time. Personal computers (PCs) today are widely used in all segments of society. They are used in two fields: business bookkeeping and household accounting. Although today they are much more convenient for household bookkeeping, their application in business bookkeeping is also very wide, and health care is one of its most significant segments (‘the PC are widely used individually, in the network or as the intelligent terminals on the great systems’) (1, 3).

Some computers are built only for specific tasks, such as monitoring the vital functions of patients in medicine or controlling satellites in space. It is usually not possible to change the purpose of such a computer. According to type, computers are divided into analog and digital. The analog computer ‘works on the principle of the analogy of the different physical processes’ and is able to store different quantities by means of different values of electric current strength at individual points inside the computer.

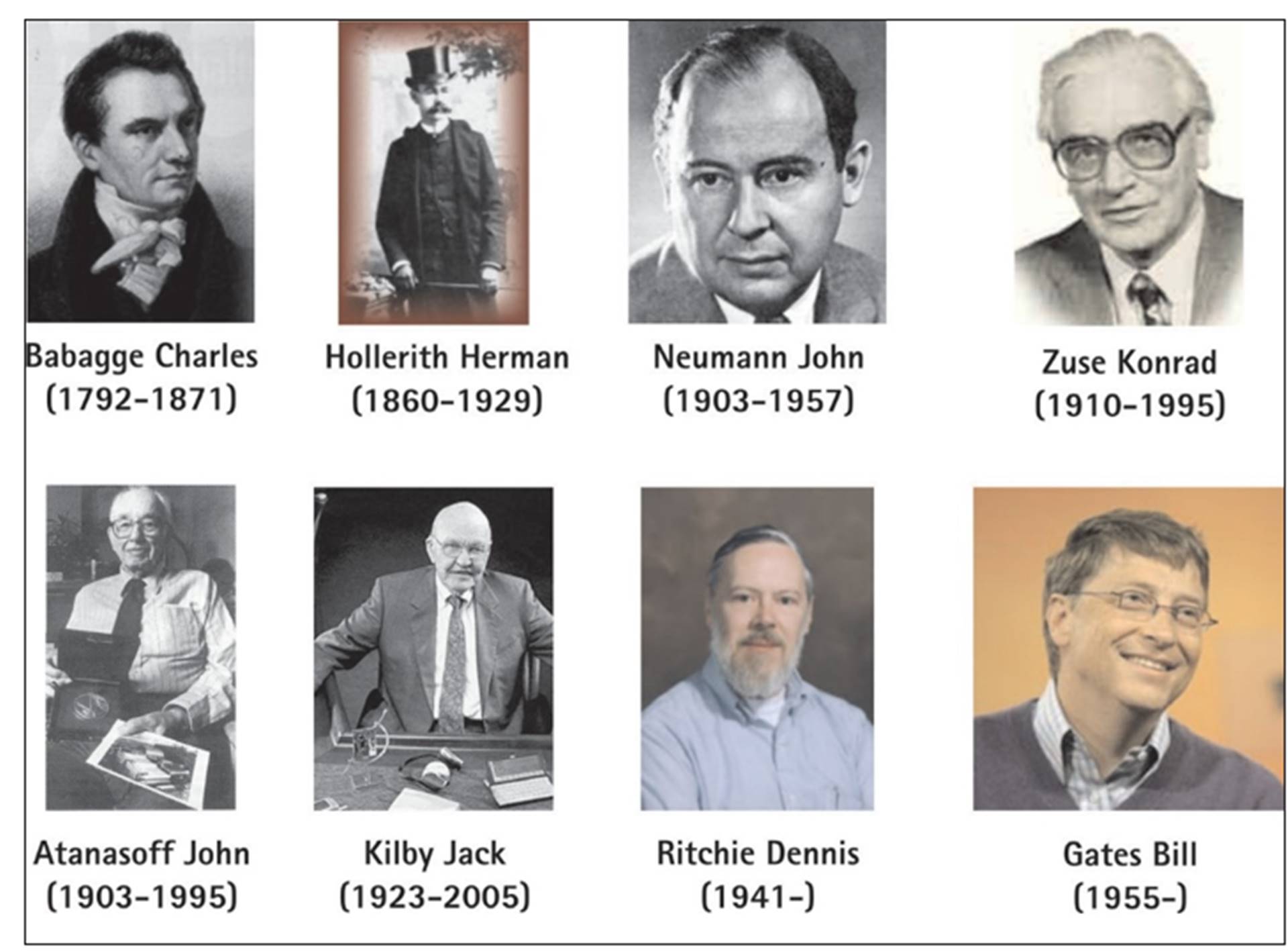

Charles Babbage (1791-1871) was the inventor of the “difference engine”, which could add and subtract, and of the “analytical engine”, the first mechanical programmable computer.

Herman Hollerith (1860-1929) was the constructor of an electrical tabulating machine. The machine could punch, read, and sort cards and was produced by his own company, the Tabulating Machine Company, founded in 1896, which later became part of International Business Machines (IBM).

John von Neumann (1903-1957) created the IAS machine, a version of the EDVAC design that used binary arithmetic and held programs in the computer's memory in digital form.

Konrad Zuse (1910-1995) created a series of automatic computing machines based on the technology of electromagnetic relays.

John Vincent Atanasoff (1903-1995), in collaboration with Clifford Berry, made the ABC computer (Atanasoff-Berry Computer) in 1939, often regarded as the first electronic digital computer. He held patents for more than 30 inventions.

Jack Kilby (1923-2005) created the first microchip (Miniature Electronic Circuit) in September 1958. He received the Nobel Prize in Physics in 2000 for this work and held about 60 other patents in this field.

Dennis Ritchie (1941-2011), in collaboration with Ken Thompson, made the first version of the operating system UNIX at Bell Laboratories, USA, which ran on a PDP-7 computer and was written in assembly language. The first licensed version of UNIX, created on a PDP-11 computer, was released in 1976.

Bill Gates (1955-), founder of Microsoft Corporation, made a great contribution to the Internet becoming a global network.

WHAT IS INFORMATICS?

The answer to this question is neither simple nor unambiguous, as informatics, being a young scientific discipline, still has no single definition. There are at least three reasons: terminological disagreements, differing approaches to understanding the notions of informatics, and the breadth of the field in which informatics problems are studied. Therefore the definition of informatics differs from one user to another, and these differences are most prominent between Western and Eastern informatics theoreticians (1, 4, 5).

The term “informatics” was introduced into the literature in 1962 by Philippe Dreyfus, who combined the French words “information” and “automatique”, because at that time automation was the main part of information services (1).

Although the electronic computer is an invention of our age, attempts to construct the first machine for processing information reach far back in the history of human civilization. The single, global function of the computer, data processing, can naturally be separated into a series of elementary operations, for example ‘the follow-up of the data, their registration, reproduction, selection, sorting, and comparison’ and so on. Computers are classified according to ‘the purpose, type and computer size’. According to purpose, computers can be general-purpose or special-purpose. General-purpose computers serve commercial applications or any other application that is necessary (1).

With the penetration of contemporary information technologies, the developed human communities have entered the information, or postindustrial, society. In technopolises or scientific centers, for example Tsukuba (near Tokyo) and Silicon Valley in the USA, the concentration of knowledge and teamwork is creating new generations of electronic computers and bio-computers. Past developments and predicted new achievements have brought, and are still bringing, enormous changes to the structure of highly developed countries, in the way of production, in the structure of personnel, in the methodologies of solving problems, and in time orientation.

The basic characteristic of the information society is that knowledge and information become the strategic and active resource for the transformation and development of such a society, in the same way that human labor and capital were for the industrial society. Information societies look to the future, not the past. In that sense, the new information technologies become the basic intellectual technology, in which theoretical knowledge and new methods (“systems analysis, probability theory, decision theory” and so on), combined with the capabilities of computers, become the essential factor in the further development of society. From this coupling are born the new generations of computers, “the intelligent robots, the artificial intelligence and expert systems, the automatized production and offices, computer diagnostic and therapeutic systems and new software technologies” (1, 6).

As a consequence of the computer revolution and the revolution in telecommunications, political changes came about worldwide. Today, the economy of the USA is founded largely on information; already by the beginning of the seventies, about half of the workforce in the USA could be classified as information workers, employed in the production, processing, and distribution of information. On the other hand, the development of information technology has also brought so-called “technological colonization”, which shows itself in dictating how the associated technology is used, engaging foreign experts, spying, wiretapping, and so on.

Recently, marked growth of the computer industry has been recorded. It is estimated that in 1987 there were 50 million computers in the world, and it is supposed that over 70% of the population use computers in their work. In the USA alone, about 43% of adult inhabitants nowadays use the Internet for their needs. In 1980 the software industry had a turnover of 3 billion dollars and an annual growth rate of 30%, while the companies dealing in commercial application software earned about 2.5 billion dollars in 1981. Telecommunication services had a turnover of about 4.6 billion dollars, with an annual increase of about 21%. In 1995 about 600 million larger telephone exchanges were built in the world, and the value of the telecommunication equipment market amounted to about 500 billion dollars.

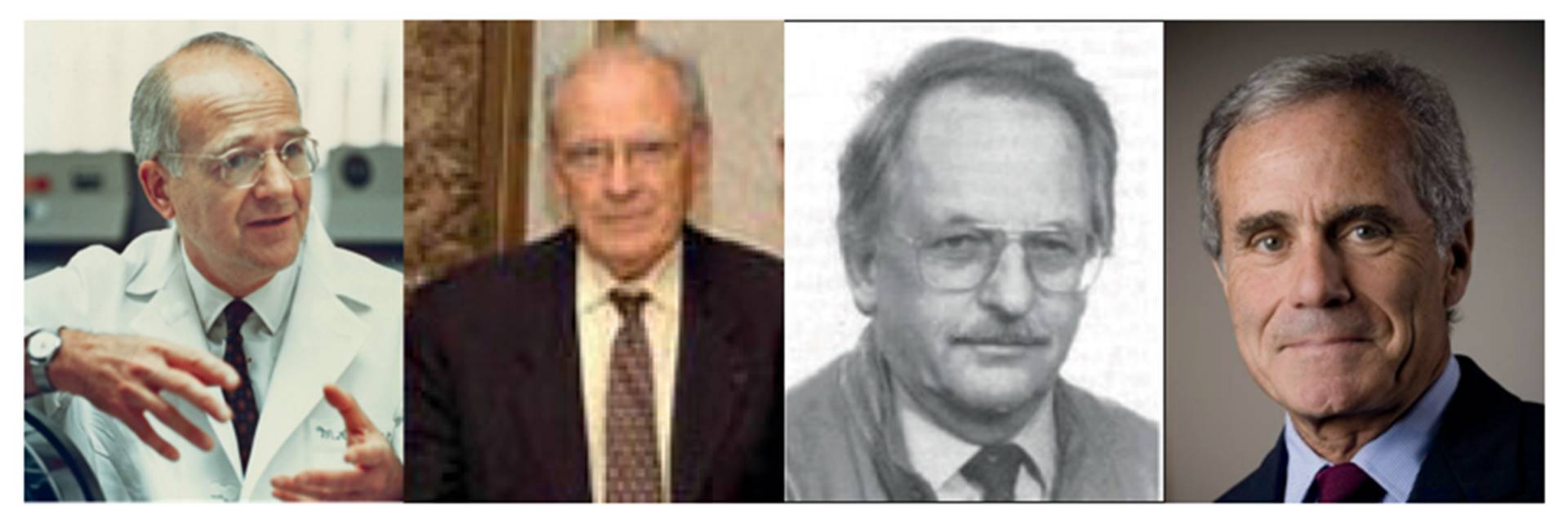

Peter L. Reichertz (1930-1987), Philippe Louis-Dreyfus (1925-2018)

Although the electronic computer was an invention of our age, attempts to construct the first “machine for accounting” and for processing information reach far back in the history of human civilization. The antecedents of this machine were the different kinds of documents that served as carriers of data. At first, data were inscribed on stone or papyrus, then on paper, and with the arrival of electronics and informatics their nature changed completely. For mankind, it was the first technological revolution. If medical informatics is regarded as a scientific discipline dealing with the theory and practice of information processes in medicine, comprising data communication by information and communication technologies (ICT), with computers as an especially important ICT, then it can be stated that the history of medical informatics is connected with the beginnings of computer usage in medicine.

WHAT IS MEDICAL INFORMATICS?

Medical informatics is the foundation for the understanding and practice of modern medicine. Its basic tool is the computer, which is both a subject of study and the means by which new knowledge is gained in the study of man, his health and disease, and the functioning of health activities as a whole (1, 3, 6, 7). The name ‘medical informatics’ originated in Europe, where it was first used by François Grémy and Peter Reichertz in 1973. The current network system has limited global performance in the organization of health care, which is especially evident in clinical medicine, where computer technology has not yet found the desired applications.

A brilliant future lies ahead of medical informatics. It can be expected that terminals and personal computers with simpler modes of operation will enable routine use of computer technology by all health professionals in the fields of telemedicine, Distance Learning (DL, Web-based medical education), application of Information and Communication Technologies (ICT), medical robotics, genomics, etc. The development of natural languages for communication with computers and of voice input recognition will make the work simpler.

Regarding the future of medical informatics education, there are numerous controversies. New experimental approaches (cell therapy, tissue engineering) raise important issues to be addressed by ICT in health. Informatics can substantially contribute to the advance of these fields. This knowledge must be applied not only at an individual level, in terms of patient care, but also to improve the health of populations, through new public health studies and programs.

The aggregation of electronic health records and informatics infrastructures to facilitate longitudinal and bio-bank-based association studies poses new opportunities for health informaticians (8). Everybody agrees that medical informatics is very significant for the whole of health care and for the needs of personnel. However, there is not yet general agreement regarding the teaching programs, because medical informatics is very complex and fast-moving, which makes it more difficult to establish stable education programs.

The facts described in this chapter of the book could be of great help in gaining knowledge about the development of a very fast-moving biomedical scientific discipline, Medical/Health informatics, which is a big part of all medical disciplines and makes up the content of the everyday practice of all medical professionals.

During his presentation at the MIE 2012 Conference in Pisa, Prof. Edward “Ted” Shortliffe proposed using the term Biomedical Informatics in the future, in line with the IMIA strategic plan for 2015: “Biomedical informatics is a multi-disciplinary area that involves multiple content areas. It is one of the fastest-growing subject/content areas in the world. The use of informatics is expected to enhance research efforts in areas such as genomics and proteomics, for example, and also to change the way medicine is practiced in the 21st century. Research in informatics ranges from theoretical to applied efforts. The demand for more research in biomedical informatics and for biomedical informatics to support other researchers escalates daily” (8).

REFERENCES

1. Masic I, Ridjanovic Z. Medicinska informatika. Avicena, Sarajevo, 1994: 269-92.

2. Masic I. Five Periods in Development of Medical Informatics. Acta Inform Med. 2014 Feb; 22(1): 44-8. doi: 10.5455/aim.2014.22.44-48.

3. Masic I, Ridjanovic Z, Pandza H, Masic. Medical Informatics. Second edition. Avicena, Sarajevo, 2010: 13-36. ISBN 978-9958-720-39-0.

4. Masic I. A History of Medical Informatics in Bosnia and Herzegovina. Avicena, Sarajevo, 2007: 3-26.

5. Masic I. History of Informatics and Medical Informatics. Avicena, Sarajevo, 2013: 5-40. ISBN 978-9958-720-50-5.

6. Kern J, Petrovecki, et al. Medicinska informatika. Medicinska naklada, Zagreb, 2009: 269-76.

7. van Bemmel JH, Musen MA. Handbook of Medical Informatics. Springer-Verlag, 1997: 37-51.

8. imia.org

*This text is republished from the book “Contributions to the History of Medical Informatics” (Eds.: Masic I, Mihalas G.), Avicena, Sarajevo, B&H. 2014: ISBN: 978-9958-720-56-7, with permission of the Publisher. Some parts are corrected and edited by the Editor of this book.

Copyright Notice: It is allowed to download the content only by providing a link to the page of our portal from which the content was downloaded. The views expressed in this text are those of the authors and do not necessarily reflect the editorial policies of The Balkantimes Press.